When Equations Learn

An Intuitive Guide to the Math of AI

A. P. Rodrigues

Names: Rodrigues, A. P., author. Title: When equations learn: an intuitive guide to the math of ai / by A. P. Rodrigues. Description: First edition. | Passo de Torres, SC, Brazil : Published by the author, 2025. Identifiers: ISBN 978-65-01-49720-4 Subjects: LCSH: Machine learning. | Artificial intelligence. | Neural networks (Computer science). | Computer science. | Mathematical models. Classification: DDC 006.3

This book is lovingly dedicated…

To Eduardo, whose thoughtful bibliographic suggestion first pointed me toward the world of artificial neural networks.

To Professor Giovane, for his kindness and thoughtful attention — and to his brother, Vinícius.

To Daniel, for the conversations, insights, and counsel that proved invaluable.

To my dear friend and true father figure, Marcus Maia.

To Fabiano and Laura, whose gratitude blossomed into remarkable generosity.

To Professor Carmen Mandarino, with deep appreciation.

And to the One who created the neuron — whose design inspired the very birth of the Perceptron.

Preface

This is a freely distributed e-book, but if you wish, you can purchase a physical copy on the author’s website. This book is about artificial learning and how this gift is bestowed upon one of the most fundamental structures of all neural networks: the Perceptron.

This book presents the very basics of artificial learning in neural networks. Anyone can use it to get a first contact with the fascinating world in which machines are capable of learning almost anything and simulating important aspects of human intelligence, such as seeing, reading, speaking, understanding what another human being says, and several other very useful capabilities that have been increasingly within everyone’s reach.

The first two chapters of this book used to make up another book, not yet translated into English, I had titled "The Most Basic of the Basic of the Basics on Artificial Learning". The present work is a formidable expansion of the previous one. Although the treatment and scope of the content in this volume can still be said to be quite basic, it goes deeper into machine learning and shows how to endow deep models with the ability to learn.

Artificial learning is the admirable secret behind the wonders we see nowadays in the most well-known AIs, such as, for example, ChatGPT or Gemini. Without it, these truly monumental byproducts of technology would not have been possible. To build an artificial intelligence, it is not enough to just know how to write code in a good programming language like TensorFlow or PyTorch. These very languages are the result of having mastered the understanding of how to make a machine, a software, or an equation grasp and retain what we would like to teach them.

However, learning is usually encapsulated in methods, functions, classes, etc., of those languages, and an excellent programmer never needs to come into contact with them, if they do not wish to, in order to describe in code the structure they want to build. In this way, the most precious gift of all artificial intelligence, in my opinion, remains somewhat hidden.

The concealment, which is partly a side effect of the automation of the programmatic layers responsible for learning, is not without reason. It greatly expands the number of people who are able to bring an idea to life through artificial intelligence, even without ever having known anything about how artificial learning works.

Unfortunately, the mathematics that describes the phenomenon of learning is not normally taught before the higher education level in Brazil. And, although it is not difficult and can even be considered old mathematical knowledge, Differential Calculus and the derivative of functions end up being unknown to a large number of people.

This entire book is about deriving functions! The functions in question are Perceptrons! But, perceptrons are functions of a somewhat more elaborate type. They are not real-valued functions. They are functions that involve matrices and vectors!

Artificial learning, which is based on derivatives, is based on a technique known as backpropagation. It is the right way to derive a vector-type function that is compositionally deep. This book is about this. This is the main content of this book.

What I present in this work is not the only important thing you will need to know about machine learning, but it is the indispensable part! Without this central core, artificial learning and the pieces of artificial intelligence mentioned above would not exist.

I wrote this book much like I myself would have wanted to read about the subject when I began to study it. I tried to show what is, in my opinion, the most important thing within the set of the most important things, showing, for example, the equations for the Perceptron’s structure and for the functioning of learning, in an explicit and to-the-point manner, so that anyone knows exactly how to code them, in their preferred language, as soon as they glance at them. This was what I would have liked to have found when I began to study the subject. An elementary treatment, accessible to beginners, single-themed regarding learning, and that would go from conceptualization, through detailed description, and all the way to application. This book, as already mentioned, focuses heavily on the descriptive stage.

In appendix Fundamental Topics in Neural Network Learning Not Covered in This Book, you can find a list of important topics in machine learning that were not covered, or were only mentioned, or insufficiently addressed in the present version of this book. It serves as a good initial thematic reference for those who wish to continue broadening their knowledge on the subject.

Finally, there are some codes that I wrote for this book and that I have made available in the notebook that is in the GitHub repository. It is quite possible that in the future even more material will be pushed there.

Happy reading!

- Preface

- Introduction

- 1. Shall We Start with a Little Game?

- 2. The Basic Description

- 3. Artificial Learning

- 4. Multiple Layers

- 4.1. The Propagation of a Signal x Through the Network’s Layers

- 4.2. A 2-Layer Perceptron

- The Equation of a 2-Layer Perceptron

- The Error Function of a 2-Layer Perceptron

- The Rates of Change of the Error

- Derivative of the Error with Respect to the Layer 2 Weights

- Derivative of the Error with Respect to the Layer 2 Biases

- Derivative of the Error with Respect to the Layer 1 Weights

- Derivative of the Error with Respect to the Layer 1 Biases

- 4.3. A Multi-Layer Perceptron

- 4.4. The Error Function of a Multi-Layer Perceptron

- 4.5. The Derivative of the Error with Respect to the Weights of Any Given Layer

- 4.6. Practical Process for Updating Weights and Biases

- 4.7. Analyzing the Dimension of the Matrices for \( \frac{\partial E}{\partial W^l}\) and \( \frac{\partial E}{\partial b^l}\)

- 4.8. Updating the Weights and Biases

- 4.9. Giving Life to Equations

- 5. Training in Batches

- Appendix A: Norm on a Vector Space

- Appendix B: The Derivatives of \( y_i\)

- Appendix C: Derivative of Vector Functions

- Appendix D: Some Observations on the Gradient

- Appendix E: Outer Product

- Appendix F: Continuous Learning

- Appendix G: The Cost Function over a Matrix Domain is a Norm

- Appendix H: Fundamental Topics in Neural Network Learning Not Covered in This Book

Introduction

The Perceptron is probably the most basic of all neural network architectures. Although it can be used on its own in small projects and for simple tasks, it is present—one way or another—in the vast majority of today’s most well-known and celebrated AI systems, such as OpenAI’s ChatGPT or Google’s Transformer.

I present the most basic mathematical structure of the Perceptron—its operation and, most importantly, its learning—in a quick and straight-to-the-point manner. The theory I cover here is only what’s indispensable for the presentation—no detours—of this fundamental piece of modern artificial intelligence. Thus, this book does not address the history of the Perceptron, nor does it provide any general statistical or analytical treatment of the concepts presented, nor does it explicitly cover matrix theory or concepts of Linear Algebra, etc.

A few demonstrations are included in the Appendices for interested readers, but only because they help to clarify important and foundational points regarding what the author believes to be the most important topic in this entire field: how a neural network is able to learn.

Thus, this book was written more to exhibit and operate with the basic formulas that provide primary and solid understanding of the subject, rather than to rigorously prove or demonstrate them. My main intention is to present and describe, with clarity, what is most basic—mathematically speaking—so that this initial contact may guide the interested reader toward a firm understanding of the subject, useful as a foundation for further or more advanced reading later on.

Neural networks and the Perceptron in particular are human inventions that were initially modeled on what was understood about how neurons work. It is a model that faintly mirrors the behavior of a living natural object. As distant as the Perceptron model may be from what we now know about the immense complexity of a real neuron, the model is nonetheless an enormous success.

Being a human invention, its most fundamental mathematical modeling is elegant and beautiful in the very sense that it is extremely simplistic. The reader will likely get this impression at various points in the book, particularly in the first chapter and in the parts that specifically address its structure.

The content presented in this book—especially from Chapter 2 onward—was created with the goal of enabling readers to use or adapt the same concepts when studying other neural network architectures and deep learning systems.

Modern languages and frameworks, such as TensorFlow, are based on and make use of the concepts of vectors and matrices. Perhaps one of this book’s merits is in explicitly showing the matrix nature of the equations that describe the Perceptron—and especially those that describe its learning process.

From experience, we know that building neural networks using tools like TensorFlow does not require programmers to have the in-depth understanding I present here, simply because this understanding is embedded as a key component, in a way hidden beneath a high-level, intuitive, and easy-to-use interface. However, the clear and explicit representation of the differential and matrix-based nature underlying artificial learning will provide the reader with an exact understanding of the beauty and power that are usually hidden from the general public, who are dazzled by the luminous results of such knowledge applications.

1. Shall We Start with a Little Game?

Let’s start this book with a little game!

One that you will remember for the rest of this book’s reading. Perhaps, for the rest of your life.

We are going to "transform" a sequence of numbers into the number \( \pi=3.14\). That’s right, you read it correctly: we are going to transform!

"But which sequence of numbers?", you might ask. Any one will do, I would answer! You choose yours!

Mine will be 1, 2, 3, 4!

But, I could have chosen any other, like 3, 2, 1, 0, -1, -2; or \( \frac{1}{2}, \frac{3}{4}, 100, -\frac{1256}{100}, 8^3, 0.67,\sqrt{\frac{1}{6}}\), no matter what numbers are in the sequence or how many!

But, we will need another sequence of numbers! The one that will learn to transform [1, 2, 3, 4] into the single number 3.14! Yes, we need another sequence! And this second sequence is the most important sequence.

This other one can also start with any numbers, but it helps a lot if, initially, it only has small numbers close to zero! And, this other sequence must have the same number of elements as the first one.

I chose the following: [0.9, 1.5, -0.1, 0.3].

I cannot overstate the importance of this sequence! It will hold the learning responsible for transforming [1, 2, 3, 4] into 3.14.

The four initial values that we see in the learning vector are not so important yet, but the four values we obtain at the end, when we finish playing, those are the most important ones!

To start, let’s combine [1, 2, 3, 4] with [0.9, 1.5, -0.1, 0.3]! That’s right! We are going to combine! And, combine linearly! In other words, we are going to treat these sequences as if they were vectors, and we are going to multiply one vector by the other, and see what happens:

Now, 4.8 is not yet 3.14, and not even close enough to 3.14!

So, we have to do something about it!

The initial version of our learning sequence has a negative number. It can be seen in the first line of 1. What if we tweak this number so that, in the end, we get a value smaller than 4.8 and closer to 3.14?!?

What could we do with -0.1 to transform 4.8 into a value closer to 3.14?

Notice that to get to 3.14 from 4.8, we could do 4.8 - 1.66! But how can we tweak the values of the vector [0.9, 1.5, -0.1, 0.3] to get the difference, -1.66, that we need in the final value?

Let’s "take a guess," as they say, and if it’s still not right, we’ll adjust it later!

So, let’s do the following: let’s change -0.1 to -0.3; and let’s also change 1.5 to 0.97.

Thus, our initial learning vector is already learning (or trying to!), as it went from [0.9, 1.5, -0.1, 0.3] to [0.9, 0.97, -0.3, 0.3]!

And, just look!

Notice that we only changed two of the four numbers in our initial learning vector. But we could have changed all of them!

What exactly did we do to those two values of the original vector? We subtracted 0.53 from 1.5 to get 0.97 in the second position, and we added -0.2 to -0.1 to get -0.3 in the third position. I found the -0.53 and the -0.2 through repeated attempts. I observed the effect that each attempt had in getting closer to or further from the desired result, until I found values increasingly closer to 3.14!

In this small example, we have just used our real intelligence to do something similar to what artificial intelligence models routinely do when they are learning!

They make small adjustments to a multitude of numbers distributed across many vectors. These vectors are usually very large, and the adjustments are made many, many times so that, each time, the small changes contribute to bringing the entire model to a response closer to the response that one wants the model to produce.

This book is about the beautiful, ingenious, and precise way in which such adjustments are calculated and applied!

Notice that we could have made adjustments to several vectors at the same time, each producing its own result! Our natural intelligence would find it tiring to deal with numbers in several vectors at a minute and tedious level of detail! But that is exactly what artificial intelligence models can do for us!

In fact, these models, also called artificial neural networks, do much more than just approximate numbers. They are capable of approximating curves and surfaces, and in general, they can approximate or map datasets of a complicated nature that describe, among other things, characteristics of human intelligence, such as vision, hearing, or speech.

I mentioned the concept of mapping above. In our little game, we created a mapping! A very simple one, but functional nonetheless. The mapping we created uses the learning we stored in the vector [0.9, 0.97, -0.3, 0.3] to create a functional relationship between one vector, [1,2,3,4], and the number 3.14, so that we can symbolize this functional relationship, y=f(x), like this: \( f(x)=[0.9, 0.97, -0.3, 0.3] \cdot x\), so that \( f([1,2,3,4])=[0.9, 0.97, -0.3, 0.3] \cdot [1,2,3,4]=3.14\)!

Get ready, because in the rest of the book we will see much more about these fabulous mappings and how to apply automatic mathematical optimization processes to them that will make artificial learning a true child’s play.

1.1. Giving Life to Equations

The Initial Game in Code

To start getting familiar with implementing the ideas, let’s replicate the "little game" from our Introduction using Python and the NumPy library.

The code below declares the two vectors we used: the input vector x (the data we want to transform) and the weight vector w (the "knowledge" of our equation). We will see the result of the initial linear combination, the "learning leap" with the already adjusted weights, and the formalization of our functional relationship into a function f(x).

Each line of code mirrors a step from our little game.

1import numpy as np

2

3# --- 1. The Starting Point ---

4

5# The input vector we want to "transform".

6x = np.array([1, 2, 3, 4])

7

8# The initial weight vector, our still-incorrect "knowledge".

9# In the book, we call this the "learning sequence".

10w_inicial = np.array([0.9, 1.5, -0.1, 0.3])

11

12# The linear combination (dot product) we did on paper.

13resultado_inicial = np.dot(x, w_inicial)

14

15print(f"Input Vector (x): {x}")

16print(f"Initial Weights (w_inicial): {w_inicial}")

17print(f"Initial Result (x . w_inicial): {resultado_inicial:.2f}")

18print("---")

19

20

21# --- 2. The Learning Leap ---

22

23# The weights after our "magical" adjustment, as we did in the game.

24# This is the final "knowledge" that the equation has learned.

25w_final = np.array([0.9, 0.97, -0.3, 0.3])

26

27# The new combination with the weights that have "learned" the task.

28resultado_final = np.dot(x, w_final)

29

30print(f"Final Weights (w_final): {w_final}")

31print(f"Final Result (x . w_final): {resultado_final:.2f}")

32print("---")

33

34

35# --- 3. Formalizing the Learning ---

36

37# We create a function f(x) that encapsulates the learned knowledge.

38# This function is our final "model", ready to be used.

39def f(input_vector):

40 # The learned weights are "stored" inside the function.

41 learned_weights = np.array([0.9, 0.97, -0.3, 0.3])

42 return np.dot(input_vector, learned_weights)

43

44# Using the function to prove that the transformation works.

45pi_calculado = f(x)

46

47print(f"Executing the learned function f(x):")

48print(f"f({x}) = {pi_calculado:.2f}")

49

50if np.isclose(pi_calculado, 3.14):

51 print("\nSuccess! Our equation has learned to calculate π!")The Extended Game: Simultaneous Adjustment

Now, let’s extend the game. What if we wanted a single set of "neurons" to learn to perform several tasks at the same time?

In the example below, we will use the same logic, but with a weight matrix W. Each row of the matrix W will act as a separate "neuron," responsible for a different transformation. Our goal is to adjust the three rows of W so that, from a single input vector x, our model simultaneously calculates approximations for three famous constants:

-

π (Pi) ≈ 3.14

-

e (Euler’s Number) ≈ 2.71

-

h (Planck’s Constant, scaled value) ≈ 6.63

The flow is the same: we will show the result with the initial (random) weights and then the result with the final, "mysteriously" adjusted weights, showing the power of simultaneous adjustment.

1import numpy as np

2

3# --- 1. Defining the Targets and Input ---

4

5# Our targets: the three constants we want the network to learn to generate.

6# Note: The value of h (6.626e-34) has been scaled to 6.63 for didactic purposes.

7alvos = np.array([3.14, 2.71, 6.63]).reshape(3, 1) # Column vector of desired responses 'z'

8

9# We will use the same input vector 'x' for all tasks.

10x = np.array([1, 2, 3, 4]).reshape(4, 1) # Column vector for input

11

12# --- 2. The Starting Point with Random Weights ---

13

14# The initial weight matrix W. Each row is a "neuron" with 4 weights.

15# 3 neurons (one for each constant), 4 weights each. Matrix shape: (3, 4).

16np.random.seed(42) # For reproducible results

17W_inicial = np.random.randn(3, 4) * 0.5 # Small random numbers

18

19# The initial linear combination using matrix multiplication (W . x)

20# The result is a vector of 3 elements, one per neuron.

21resultado_inicial = np.dot(W_inicial, x)

22

23print("--- Initial State ---")

24print(f"Input Vector x:\n{x.T}")

25print(f"\nInitial Weight Matrix W_inicial:\n{W_inicial}")

26print(f"\nInitial Result (W_inicial . x):\n{resultado_inicial}")

27print("As expected, the initial result is random and does not resemble our targets.")

28print("-" * 25)

29

30

31# --- 3. The Simultaneous Learning Leap ---

32

33# Here is the "magical" weight matrix after the adjustment.

34# Each row has been adjusted for its respective task.

35# (These values were pre-calculated so that the result is correct)

36W_final = np.array([

37 [0.9, 0.97, -0.3, 0.3], # Row that "learned" to calculate Pi

38 [0.1, 0.5, 0.8, -0.1525], # Row that "learned" to calculate 'e'

39 [1.0, 2.0, 0.9, -0.2425] # Row that "learned" to calculate 'h'

40])

41

42# The new combination with the weight matrix that has "learned" the three tasks.

43# Note, below, that the final results are very close to the desired targets. Although we could

44# have obtained the exact values for π ≈ 3.14, e ≈ 2.71, and h ≈ 6.63 by adequately

45# manipulating the weights of W_final in more adjustment steps, this is what is normally

46# obtained numerically in real projects: very good approximations.

47resultado_final = np.dot(W_final, x)

48

49print("\n--- Final State (After Learning) ---")

50print(f"Final Weight Matrix W_final:\n{W_final}")

51print(f"\nFinal Result (W_final . x):\n{resultado_final}")

52print(f"\nDesired Targets:\n{alvos}")

53

54# Verifying the success

55if np.allclose(resultado_final, alvos, atol=0.01):

56 print("\nSuccess! Our weight matrix has learned to perform three tasks simultaneously!")2. The Basic Description

Among artificial neural networks, the perceptron is the simplest.

The Perceptron is, so to speak, the building block of most neural network models.

Its structure is simple and easy to understand.

Mathematically, the Perceptron is an equation that learns.

But what does the Perceptron’s equation learn? Anything! It learns to provide the answers we want it to give for the elements of any given set of data or information. From this perspective, the Perceptron learns to create a point-to-point mathematical relationship between a set of data, \( D\), and another set of desired responses, \( Z\). This relationship takes the functional form \( P: D\longrightarrow Z\) and acts as the equation \( P(d)=z\) between specific points.

2.1. Weights and Biases, or Trainable Parameters

The Perceptron is based on a matrix of trainable parameters, \( W\), and also a vector of other trainable parameters, \( b\).

and

These parameters are called trainable because they change during the perceptron’s training, mysteriously accumulating the network’s learning until they reach an optimal value. At that point, the network is ready to perform the task it was created for.

The elements of the matrix \( W\) are the perceptron’s weights, while the vector \( b\) is the bias. The elements of \( b\) are the biases for each neuron.

To put it very simply and directly, the number of rows in \( W\) is the number of neurons in the Perceptron, and the number of columns is the number of weights for each neuron. All neurons (in the same layer) have the same number of weights.

I referred to the perceptron as the building block of most neural networks. Neural networks are built with basic structures called layers, and the Perceptron is this layer in a vast majority of network architectures. Furthermore, the Perceptron itself can have layers, as we will see in Chapter 3.

Note that if the Perceptron consisted of a single neuron, then the matrix \( W\) representing this layer would, in fact, be a row-vector! That is, \( W\) would be a \( 1\times m\) matrix:

Just to give an example, as incredible as it may seem, even Deep Learning projects with more complex and deep architectures that perform binary classification are likely using a Perceptron with a single row of weights as their final layer. This would be the case for a network whose goal is to read bone x-rays and determine whether they indicate fractures. Such a well-developed network could be trained to identify fissures or fractures that are difficult for the human eye to detect.

2.2. The General Form of the Perceptron

A perceptron, like any other neural network, is created to perform a task. It must learn to perform its task. It must produce a result, \( z\), that corresponds to each element, \( x\), both in a set of data or information, \( D\). We say that \( D\) is a set of vectors or tensors and that the perceptron is trained on this set. We will talk more about \( D\) when we get to Section Training.

If \( x\in D\) is one of the training vectors, then the perceptron’s response, \( y\), to this vector is:

The vector \( x\) is one of the perceptron’s inputs, and \( y\) is the corresponding output.

Any of the expressions in (6) are sometimes simply called the perceptron’s linearity.

2.3. Two Alternative Representations

Below, I will briefly present two alternative ways the Perceptron can be represented. The reader may encounter these in other books, and knowing they exist can broaden one’s ability to manipulate the mathematical tools that describe and model it. I include them here in passing, but we will not use them in this book.

The \( xW\) Form of the Matrix Product

Note that the first equation of 6 could have been written with the vector \( x\) multiplying the matrix \( W\) from the left, like this:

in which case the vector \( x^T\) would be a row-vector, the columns of \( W^T\) would be the Perceptron’s neurons, while the number of rows in \( W^T\) would be the number of weights in each neuron.

Weights and Bias in the Same Matrix

We can embed the bias vector of each layer into its respective weight matrix, making it the last column of these matrices. This possibility is already present in the Perceptron’s equations. Consider, for example, the third equation in 6 and notice that it can be rewritten as follows:

with \( b_i=w_{i(m+1)}\) and with \( x_{m+1}=1\).

Now, note that the second equation in 8 is the same as:

This way, we would only need to embed the scalar unit as the last position of each incoming vector, \( x\), so that it now has \( m+1\) elements.

In this way, the equation above is simply:

2.4. Activation Functions

It is extremely common to pass each of the values of \( y\) to the same activation function. This activation function is also known as a "non-linearity," because it "breaks," in a way, the linear behavior produced in 6. It can take one of several commonly used forms. For now, let’s just designate it with a symbol: \( a\). Thus, in its most general form, the single-layer perceptron is:

This last expression might seem a bit confusing at the moment, perhaps because of the expressions inside the brackets, but don’t be alarmed. It displays the mathematical symbols that mirror the conceptual structure of a single-layer Perceptron. It also shows how the vector \( x\) is "absorbed" and processed by the network. The vectors \( W_i\) are the rows of \( W\) and the scalars \( b_i\) are elements of \( b\). See how the vector \( x\) is processed by each of the rows of \( W\). The equation shows how the signal \( x\) "flows" through the perceptron until it is transformed into its response, \( P\).

Try to firmly grasp the fact that \( a\) is a vector and that its elements are functions whose independent variables are, respectively, the elements of the vector \( y\). In the Subsection below, I have placed a table with some well-known and commonly used activation functions.

Some Activation Functions

The table below displays some of the most well-known activation functions. They are shown with the notation we use throughout the book, revealing their nature as real-valued functions of a real domain, with the exception of the Softmax function. The Softmax function uses all components of a linearity vector to generate a percentage relative to the \( i\)-th component.

| Name | Formula |

|---|---|

Sigmoid |

\( a_i(y_i)=\frac{1}{1+e^{-y_i}}\) |

Hyperbolic Tangent |

\( a_i(y_i)=\tanh(y_i)\) |

Softmax |

\( a_i(y) = \frac{e^{y_i}}{\sum_{k=1}^{n}e^{y_k}}\) |

ReLU |

\( a_i(y_i) = \max\{0,y_i\}\) |

2.5. Training

We have already said that a perceptron must learn to perform a certain task and that it is trained on a set of data or information, \( D\). This set can be considered a set of ordered pairs, \( (x,z)\in D\), such that \( z\in Z\subset D\) and \( x\in X\subset D\) are, respectively, the set of desired responses and the set of vectors representing any domain of things we are interested in relating to the desired responses.

We have already seen that \( x\) is a vector. The second component of the ordered pair, \( (x,z)\), is the response we want the perceptron to learn to give to the input vector \( x\). The ordinate \( z\) can be a scalar number, a vector, a matrix, or a tensor. This will depend on how we mathematically encode the task. In this book, our desired responses, \( z\), will only be scalars or vectors.

Initially, the perceptron gives some arbitrary response, \( P\), to the vector \( x\). Throughout the training, this response gets closer to the correct or desired response, \( z\). We check this approximation with a Cost Function, which we will denote with the symbol \( E\) (we will see more about it in Section The Cost Function), which tells us how far or how close \( P(x)\) is from the desired response \( z\). Throughout the training, the value of the Cost Function decreases because \( P(x)\) becomes increasingly close to \( z\). Our goal during training is to make the error go to zero, meaning that the equality \( P(x)=z\) becomes true, or almost true, as a sufficiently small error is usually enough.

From a certain point of view, this entire book is about how to reintegrate into the Perceptron, during training, the information contained in the error, \( E\), to make the Perceptron more accurate in its task. That is, to create the relationship we desire, \( P(x_i)=z_i\).

There are several functions that can be used as a cost or loss function. The choice depends on the project and sometimes on the preference of the person training the network. In Section Some Cost Functions, there is a table with some useful and commonly used cost functions. The important thing to know is that the Cost Function, whatever it may be, must comply with the mathematical definition of a norm. This is a topic we won’t delve into in this book, but the interested reader can find the definition in Appendix Norm on a Vector Space, along with a small proof that if a norm is zero, then its argument must also be zero.

We will see in Section Updating the Trainable Parameters that the process we will use to approximate \( P(x)\) and \( z\) is based on the gradient of the Error Function. This process, called Gradient Descent or Stochastic Gradient Descent, gradually indicates the direction of the lowest value of \( E\) and, thus, also indicates the path to a smaller separation between \( P\) and \( z\).

We can say that the Perceptron is a mapping that learns to give an appropriate response \( z\) to each \( x\) in a learning process that is carried out over one or several training sessions. Usually, several! In a training session, the Perceptron receives all the elements \( x\) from \( D\), one after the other, and for each \( x\), the corresponding \( P(x)\) is calculated. After that, the error function is calculated on \( P(x)\) and \( z\), so we can express it as: \( E(P,z)\). As many training sessions are performed as necessary to make \( E(P,z)\) sufficiently close to zero. This is the reason for requiring the Error or Cost Function to be a norm, because then, when \( E(P,z)\longrightarrow 0\), it will also be true that \( P-z\longrightarrow 0\), meaning the network’s response is becoming equal to the desired response.

3. Artificial Learning

3.1. Optimization

Artificial learning is an optimization process.

What is optimized in artificial learning? A function that is usually called a Cost Function, Loss Function, or even Error Function! I personally call it, in this context of artificial learning, a Pedagogical Function, since it measures how far the Perceptron’s response is from the desired response, and by this means, we know if the network is learning or not. It is from the derivation of the Loss Function that learning happens.

Anyone who has ever derived a function to then find its maximum or minimum is in a perfect position to understand how artificial learning happens.

Learning occurs in one or more training sessions, where the Perceptron’s trainable parameters are repeatedly updated (See Section Training). These parameters are updated at each step of the training, that is, after each training batch is presented to the network. We will see more about training in batches in the chapter Training in Batches.

The description of how artificial learning happens is the most important and interesting part of neural networks in this author’s opinion. Without this, there is no machine learning.

3.2. The Cost Function

When we go to school, our learning is measured by assessments. The learning of neural networks is also measured by performance evaluations.

The Perceptron’s school is the training session.

Just as a final school grade is obtained from a formula, neural networks also use formulas that "grade" their performance.

In the case of neural networks, such formulas are known as cost functions, loss functions, or error functions. In this book, I refer to them much more often as error functions. They, in fact, measure the error made by the network when trying to predict a response to a corresponding input.

The error function can have several forms, but I will not address any specific form now, as we are interested in how its general form fits into the learning formulas. For now, we will just symbolize any error function with the letter \( E\). In Subsection Some Cost Functions, right below, you can find a table with some cost functions.

There is much to say about \( E\), but for now, let’s stick to the operational aspects that make it possible for the perceptron to learn.

The function \( E\) takes the Perceptron’s output as its argument. So,

But, you see, the perceptron’s output depends on its trainable parameters, meaning \( E\) also depends on the weights and bias. So, it is more common to write:

This notation is very useful because the perceptron’s learning depends on the derivative of \( E\) with respect to its weights and bias.

Here, we need to consider the compositional structure of the error function,

and keep in mind that \( a\) and \( y\) are vectors—the data from 6 and 11—and that \( E\) is a real-valued function.

Some Cost Functions

Below are some of the most well-known Cost Functions (with a vector domain).

| Name | Formula |

|---|---|

Mean Squared Error |

\( E=\frac{1}{n}\sum_{i=1}^n(a_i-z_i)^2\) |

Mean Absolute Error |

\( E=\frac{1}{n}\sum_{i=1}^n \begin{vmatrix}a_i-z_i\end{vmatrix}\) |

Cross-Entropy |

\( E=-\frac{1}{n}\sum_{i=1}^n z_i\cdot \log a_i\) |

Binary Cross-Entropy |

\( E=-\frac{1}{n}\sum_{i=1}^n \begin{bmatrix}z_i\cdot \log a_i+(1-z_i)\cdot \log (1-a_i)\end{bmatrix}\) |

3.3. Gradient of the Error with Respect to W

Let’s calculate the derivative of the error function with respect to the Perceptron’s weights. This derivative is also known as the gradient of the Error.

We know that \(\frac{\partial E}{\partial a}\) is the gradient of \( E\) with respect to the activation vector \( a\), because \( E\) is a real-valued function with a vector domain. Thus, \(\frac{\partial E}{\partial a}=\nabla_a E\).

The derivative \(\frac{\partial a}{\partial y}\) generates a matrix. This comes from the fact that both \( a\) and \( y\) are vector functions. See appendix Derivative of Vector Functions for more details on derivatives of vector functions.

The derivatives \(\frac{da_i}{d y_i}\) cannot yet be calculated or fully reduced. We don’t yet have any definite form for a. This will happen when we are dealing with specific examples or architectures.

If we analyze equation 11, we will see that each \( a_i\) depends only on \( y_i\). Therefore, we must have \(\frac{d a_i}{d y_j}=0\) if \( i \ne j\). Consequently, \(\frac{\partial a}{\partial y}\) will be a diagonal matrix. The elements, \(\frac{da_i}{d y_i}\), of this diagonal matrix will depend on the specific form of \( a\).

The differential \(\frac{\partial y}{\partial W}\) has the form of a column-vector with \( n\) elements. However, these elements are, in turn, \( n\times m\) matrices.

Now, notice that each element \( i\) of the column-vector on the right side of 16 is the derivative of a real-valued function whose arguments coincide only with the \( i\)-th row of \( W\). These real-valued functions are defined in equation 6, from which we know that \( y_i(W_i)=\sum_{j=1}^{m} w_{ij}x_j +b_i\) (See Appendix The Derivatives of \( y_i\) to review the procedure for deriving this equation). Therefore, the elements of the column-vector are matrices with null entries, with the sole exception of their \( i\)-th row.

Column-vectors or row-vectors of matrices will appear many times in this presentation. This is due to the fact that we are deriving the error, \( E\), with respect to the entire weight matrix at once.

Thus, the formula 15, which calculates the partial derivative of the perceptron’s error \( E\) with respect to its weights \( W\), is:

3.4. Gradient of the Error with Respect to the bias b

We now need to calculate the derivative of E with respect to the bias vector, \( b\).

From 6, we see that the biases are embedded at the deepest level of the perceptron, along with the weights.

Before, we considered the error as a function of only the weights. Now, let’s consider it as a function of only the biases.

Based on the calculations we’ve already done in the previous section, we can very simply write:

If necessary, see Appendix The Derivatives of \( y_i\) for more considerations on the calculation of \(\frac{\partial y}{\partial b}\).

3.5. Some Cost and Activation Functions and Their Derivatives

| Name | Formula | Derivative \(\left(\frac{d a_i}{d y_i}\right)\) |

|---|---|---|

Sigmoid |

\( a_i(y_i)=\frac{1}{1+e^{-y_i}}\) |

\(\begin{aligned} &\frac{e^{-y_i}}{(1+e^{-y_i})^2}\\ &\text{or}\\ &a_i(1-a_i) \end{aligned}\) |

Hyperbolic Tangent |

\( a_i(y_i)=\tanh(y_i)\) |

\(\begin{aligned} &1-tanh^2 y_i\\ &\text{or}\\ &1-a_i^2 \end{aligned}\) |

Softmax |

\( a_i(y) = \frac{e^{y_i}}{\sum_{i=1}^{n}e^{y_i}}\) |

\(\begin{aligned} &\frac{e^{y_i}}{\sum_{j=1}^{n}e^{y_j}} \left( 1- \frac{e^{y_i}}{\sum_{j=1}^{n}e^{y_j}} \right )\\ &\text{or}\\ &a_i(1-a_i) \end{aligned}\) |

ReLU |

\( a_i(y_i) = \max\{0,y_i\}\) |

\(\max\{0,1\}\) |

| Name | Formula | Derivative \(\left(\frac{d E}{d a_i}\right)\) |

|---|---|---|

Mean Squared Error |

\( E=\frac{1}{n}\sum_{i=1}^n(z_i-a_i)^2\) |

\( -\frac{2(z_i-a_i)}{n}\) |

Mean Absolute Error |

\( E=\frac{1}{n}\sum_{i=1}^n \begin{vmatrix}a_i-z_i\end{vmatrix}\) |

\(\begin{equation*} \begin{aligned} &\frac{1}{n} \frac{a_i-z_i}{\begin{vmatrix}a_i-z_i\end{vmatrix}}\\ &\text{or}\\ &\frac{1}{n} \begin{cases} 1 & \text{if}\ \ a_i>z_i \\ -1 & \text{if}\ \ a_i<z_i \\ \nexists & \text{if}\ \ a_i=z_i \end{cases} \end{aligned} \end{equation*}\) |

Cross-Entropy |

\( E=-\frac{1}{n}\sum_{i=1}^n z_i\cdot \log a_i\) |

\( -\frac{z_i}{na_i}\) |

Binary Cross-Entropy |

\( E=-\frac{1}{n}\sum_{i=1}^n \begin{bmatrix}z_i\cdot \log a_i +(1-z_i)\cdot \log (1-a_i)\end{bmatrix}\) |

\(\frac{a_i-z_i}{a_i(1-a_i)}\) |

3.6. Updating the Trainable Parameters

Finally, we’ve arrived where we wanted: \( \frac{\partial E}{\partial W}\) and \( \frac{\partial E}{\partial b}\) will be used to update the perceptron’s weights and bias. The process where this is done is called backpropagation and is based on the Stochastic Gradient Descent technique. This technique, in turn, is based on the fact that \( \frac{\partial E}{\partial W}\) is a gradient vector and, therefore, always points in the direction of the greatest rate of change of \( E\). Consequently, its negative, \( -\frac{\partial E}{\partial W}\) (see formulas 21 and 23), will point in the direction of the smallest variation. I won’t go into further detail on this point here, but the interested reader can find a few other interesting and pertinent observations about our use of it in Appendix Some Observations on the Gradient. I will just mention that the aforementioned negative sign is very important. If you have ever coded this formula, say in Python or TensorFlow or any other language or framework, to train a Perceptron, but mistakenly used a positive sign instead of a negative one, you may have noticed that the error made by the Perceptron actually only increases instead of decreasing!

At this moment, we have everything necessary to present the formula that allows learning to happen. This formula has a simplicity and beauty that is only matched by its power to make the Perceptron’s learning possible.

During a training session, the Perceptron’s weights are updated many times. Each update happens at a moment, \( t\), of the training. As the training evolves, the weights are altered in search of better performance, that is, in search of a lower cost, \( E\). At a given moment, \( t\), of the training, the perceptron has the weight matrix \( W_t\), which is updated by adding \( \Delta W_t\). The result becomes the new current weight matrix of the Perceptron, \( W_{t+1}\).

where

The symbol \( \eta\) is called the learning rate. It is a parameter whose importance lies in dictating the pace of the training, as it allows for adjusting the "speed" of the training. However, it is difficult to know what the optimal speed is for each step of a neural network’s training, although there are general guidelines and useful calculation methods, which we will not discuss at this time. It is a somewhat delicate parameter to handle, as are other defining parameters of neural networks. In practice, small values like \( \eta=0.01\) or \( \eta=0.001\) are always used as a first alternative. Other approaches alter the value of \( \eta\) throughout the training so that its value decreases as the training progresses.

The bias update is done with formulas very similar to those for updating the weights:

where

3.7. Giving Life to Equations

Artificial Learning: A First Example.

This notebook implements our first functional Perceptron in its simplest possible form: a single layer with a single neuron. We will use only Python and NumPy to see the theory from Chapters 1, 2, and 3 in its purest form.

The objective is to apply backpropagation to a real and quite rudimentary image classification problem. As our architecture is the simplest that exists, we will see how the general learning equation 17 simplifies in a beautiful and intuitive way. The calculation of the weights' gradient (∂E/∂W), for example, will not have that clumsy column-vector of matrices, as we will see.

1import numpy as np

2import matplotlib.pyplot as plt

3import requests

4from PIL import Image

5from io import BytesIO

6

7# Function to download and prepare an image from a URL

8def download_and_prepare_image(url):

9 response = requests.get(url)

10 img = Image.open(BytesIO(response.content))

11 img = img.convert('L')

12 img_array = np.array(img) / 255.0

13 img_array = np.where(img_array > 0.7, 1, 0)

14 return img_array.flatten()

15

16# URLs of the "little faces" images

17base_url = 'https://raw.githubusercontent.com/aleperrod/perceptron-book/2e9af4436dd7317ea18fbcae583429cccc944ef0/carinhas/'

18urls = [

19 base_url + 'gross1.png', base_url + 'gross2.png', base_url + 'gross3.png',

20 base_url + 'thin1.png', base_url + 'thin2.png', base_url + 'thin3.png'

21]

22

23X_train = np.array([download_and_prepare_image(url) for url in urls])

24z_train = np.array([0, 0, 0, 1, 1, 1])

25

26# Test images

27url_teste_thin = base_url + 'thin4.png'

28url_teste_gross = base_url + 'gross4.png'

29x_teste_thin = download_and_prepare_image(url_teste_thin)

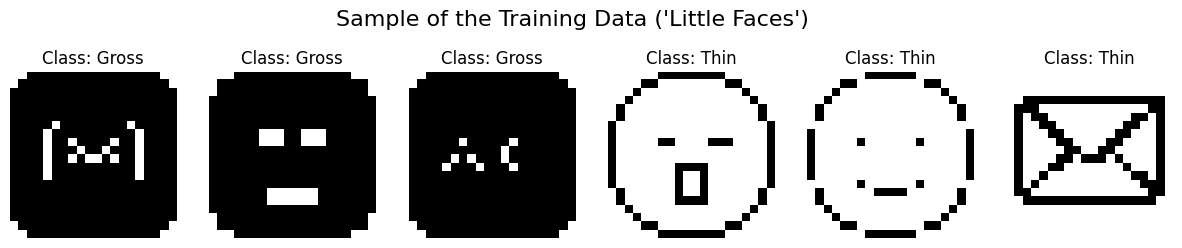

30x_teste_gross = download_and_prepare_image(url_teste_gross)1# Visualizing our training data

2fig, axes = plt.subplots(1, 6, figsize=(15, 3))

3for i, ax in enumerate(axes):

4 ax.imshow(X_train[i].reshape(20, 20), cmap='gray') # Reshape error, corrected to 20x20

5 ax.set_title(f"Class: {'Gross' if z_train[i] == 0 else 'Thin'}")

6 ax.axis('off')

7plt.suptitle("Sample of the Training Data ('Little Faces')", fontsize=16)

8plt.show()

Step 2: Defining the Tools (Functions)

As we are building everything "by hand," we define our tools as separate functions. Note that, as our Perceptron has only one neuron, the output P and the error E are single values (scalars), which simplifies their derivatives.

1# Activation Functions and their derivatives

2def sigmoid(y):

3 return 1 / (1 + np.exp(-y))

4

5def sigmoid_derivative(a):

6 return a * (1 - a)

7

8# Cost Function (Mean Squared Error) and its derivative

9# z and P are scalars here

10def mean_squared_error(z, P):

11 return (z - P)**2

12

13def mean_squared_error_derivative(z, P):

14 return 2 * (P - z) # Keeping the factor of 2 for fidelity to the formal derivative

15

16# Function to initialize the parameters of our neuron

17def initialize_parameters(input_dim):

18 # W is a 1D vector (not a matrix) with 400 weights.

19 W = np.random.randn(input_dim) * 0.01

20 # b is a single number (scalar)

21 b = 0.0

22 return W, bStep 3: Training "by Hand" (Example by Example)

This is the heart of our notebook. The training loop implements the theory of artificial learning. For each image x and its label z, the process is:

-

Forward Pass: We calculate the output

P, which in this case is a single number. -

Backpropagation: We calculate the gradients. Here lies the beauty of the simplification:

-

The error "delta"

dE/dyis a scalar, as there is only one linearity,y. -

The gradient of the weights,

dE/dW, is calculated by multiplying this scalar delta by the input vectorx. The equation∂E/∂W = (∂E/∂a * ∂a/∂y) * xmanifests here. The expression 17 simplifies because, in this example, the weight matrixWreduces to a row-vector!

-

-

Update: We adjust

Wandbusing the calculated gradients.

1# --- Hyperparameters and Initialization ---

2learning_rate = 0.1

3epochs = 30

4W, b = initialize_parameters(X_train.shape[1])

5

6cost_history = []

7

8print("Starting the training in Python/NumPy (corrected version)...")

9for i in range(epochs):

10 total_epoch_cost = 0

11 # The inner loop iterates over each example individually

12 for x, z in zip(X_train, z_train):

13

14 # --- 1. Forward Pass ---

15 # y = W . x + b (dot product between two vectors -> scalar)

16 y = np.dot(W, x) + b (1)

17 # P = a(y) (activation on a scalar -> scalar)

18 P = sigmoid(y) (2)

19

20 # --- 2. Cost Calculation ---

21 cost = mean_squared_error(z, P)

22 total_epoch_cost += cost

23

24 # --- 3. Backpropagation (with scalars) ---

25 # Initial delta: dE/dy = dE/dP * dP/dy (product of scalars)

26 dE_dP = mean_squared_error_derivative(z, P)

27 dP_dy = sigmoid_derivative(P)

28 dE_dy = dE_dP * dP_dy

29

30 # Gradients of the parameters

31 # dE/dW = dE/dy * d(y)/dW = dE/dy * x

32 # This is the simplified form! It is the multiplication of a scalar (dE_dy) by a vector (x).

33 dE_dW = dE_dy * x

34 # dE/db = dE/dy * d(y)/db = dE/dy * 1

35 dE_db = dE_dy

36

37 # --- 4. Parameter Update ---

38 W -= learning_rate * dE_dW

39 b -= learning_rate * dE_db

40

41 # End of epoch

42 average_cost = total_epoch_cost / len(X_train)

43 cost_history.append(average_cost)

44 if (i + 1) % 5 == 0:

45 print(f"Epoch {i + 1}/{epochs} - Average Cost: {average_cost.item():.6f}")

46

47print("Training finished!")| 1 | Here, the linearity is \( y=W\cdot x+b\), which for our present case is \( y=\begin{bmatrix}w_{1}&,\dots , & w_{400}\end{bmatrix}\cdot\begin{bmatrix} x_1 , \dots , x_{400}\end{bmatrix}^t+b\) (the superscript \( t\) denotes the transpose from row to column), which in turn is equivalent to \( y=w_{1}x_1+\dots +w_{400}x_{400} +b\). |

| 2 | We return the sigmoid activation function \( P=a(y)=\frac{1}{1+e^{-y}}=\frac{1}{1+e^{-(W\cdot x+b)}}=\frac{1}{1+e^{-(w_{1}x_1,\dots , w_{400}x_{400} +b)}}\). |

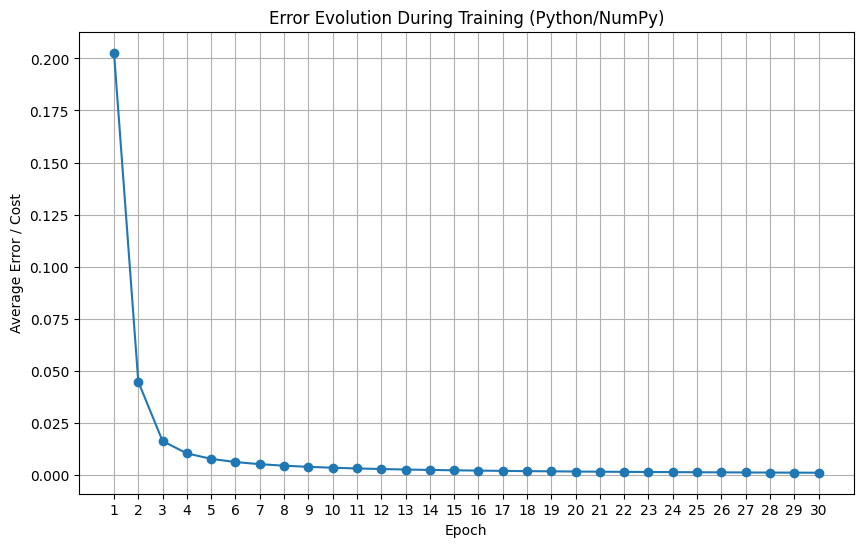

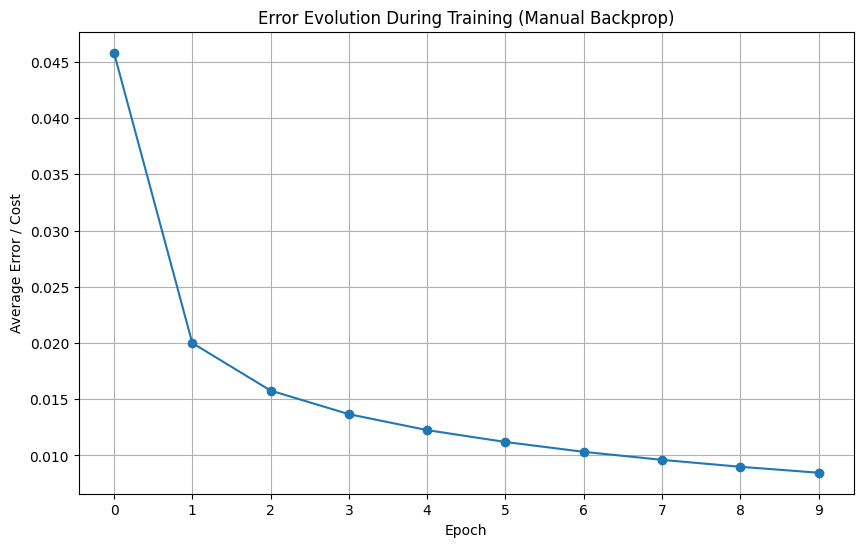

Step 4: Analyzing the Training Results

One thing is to run the training, another is to know if it worked. The graph below shows the evolution of the average cost over the epochs.

A descending curve is the sign we are looking for: it indicates that the Perceptron was, with each pass through the data, adjusting its weights and becoming progressively better at its task, meaning the error was decreasing.

1# Plot the cost function graph to see if the network learned

2plt.figure(figsize=(10, 6))

3plt.plot(cost_history, marker='o', linestyle='-')

4plt.xlabel("Epoch")

5plt.ylabel("Average Error / Cost")

6plt.title("Error Evolution During Training (Python/NumPy)")

7plt.grid(True)

8plt.xticks(np.arange(len(cost_history)), np.arange(1, len(cost_history) + 1))

9plt.show()

Step 5: Testing the Model in Practice

After training, the true test of a neural network is its performance on data it has never seen before. The cell below defines a function that takes a test image, applies the forward pass with the weights W and the bias b that we have just trained, and displays the image with the model’s prediction alongside the real label.

The output of the Sigmoid function (P) is a number between 0 and 1. We can interpret it as the neuron’s "confidence" that the image belongs to the "Thin" class (label 1). We use a threshold of 0.5 to make the final decision.

Run the cell to see if the Perceptron gets it right!

1# Function to test the trained model on a new image

2def test_model_numpy(image, real_label_str, W, b):

3 # The Forward Pass is the same as inside the training loop

4 y = np.dot(W, image) + b

5 P = sigmoid(y)

6

7 # The classification is based on a threshold of 0.5

8 final_prediction = "Thin" if P > 0.5 else "Gross"

9

10 # Displaying the results

11 print(f"--- Testing image: '{real_label_str}' ---")

12 print(f"Neuron output (P): {P.item():.4f}")

13 print(f"Final prediction: {final_prediction}")

14

15 plt.imshow(image.reshape(20, 20), cmap='gray')

16 plt.title(f"Prediction: {final_prediction} | Real: {real_label_str}")

17 plt.axis('off')

18 plt.show()

19

20# Testing with the two images we set aside

21print("Starting tests with unseen data...\n")

22test_model_numpy(x_teste_thin, "Thin", W, b)

23print("\n" + "="*40 + "\n")

24test_model_numpy(x_teste_gross, "Gross", W, b)A Real Lesson on Artificial Learning

Our perceptron, with a single neuron, learned to distinguish images with a strong predominance of black from images with a strong predominance of white. The former have white strokes on a black background, and the others have, on the contrary, black strokes on a white background. But how, exactly, did it do this?

Thanks to the simplicity of a "toy" artificial neural network, composed of a single neuron, we can give an answer well-rooted not only in the architecture of such a network but in its numerical operation.

Our perceptron is composed of a single linear combination, \( y=w_{1}x_1+\dots +w_{400}x_{400} +b\), whose result, \( y\), is given to a sigmoid activation function.

On one hand, the weights \( W=[w_1, \dots , w_{400}]\) are initialized, each one, with small values, W = np.random.randn(input_dim) * 0.01.

The "trick," on the other hand, lies in transforming our images into vectors of zeros and ones, img_array = np.where(img_array > 0.7, 1, 0), that is, for each element of \( x=[x_1, \dots , x_{400}]\), we have \( x_i\in \{0,1\}\), where for the images with a black background, \( i's\) with \( x_i=0\) predominate, while for those with a white background, \( i's\) with \( x_i=1\) predominate.

Now, we need to see that \( 0\le \sum_{i=0}^{400} x_i \le 400\), because, in the unlikely case that all positions of the vector \( x\) were null, then this summation would be null, while in the equally unlikely case that all positions were equal to one, the sum would be 400!

We can visually inspect and verify that the positions of \( W\), after training, are within a tiny interval, contained within the interval \( (-1, 1)\), that is, being a small number, the exaggerated inequality \( -1\ll w_i \ll 1\) holds.

Thus, the training led the positions of \( W\) to assume values with magnitudes contained in this interval, such that, for example, if the image has a predominance of zeros, then we expect that:

This value of \( y\), calculated for the linear combination of weights and positions of the image with a predominance of zeros, agrees quite well with the interval we just saw that the \( w_i\) must be in. The few 1s that appear in the vector \( x\) are multiplied by small \( w_i\), mostly negative, or with the sum of the negative values exceeding the positive values in magnitude, and whose sum ends up having the result we arrived at in 24, which also agrees with the lower half of the steep "s" shape of the sigmoid function being on the negative side of the horizontal axis.

4. Multiple Layers

A Perceptron can have more than one layer, and it usually does, especially in Deep Learning models.

It’s possible to "stack" layers! This is done to improve learning.

The more a perceptron correctly associates the training data, \( x\), with its corresponding desired response, \( z\), the better it is learning. Strategically increasing the number of network weights by increasing the number of layers can improve training performance. That is, \( E\) decreases, which translates to improved learning—meaning more pairs \( (x,z)\in D\) are correctly associated by the perceptron.

4.1. The Propagation of a Signal x Through the Network’s Layers

An input signal, \( x\), will "flow" through the network’s layers, entering the first layer, passing through each one until it exits through the activation functions of the final layer.

We have already seen that a single-layer perceptron is defined by its weights and bias, so we can view it as the object:

Let’s represent the stacking of layers, that is, the juxtaposition of several single-layer perceptrons, simply like this:

where

The superscripts in 27 indicate the layer number to which the weights and bias belong.

4.2. A 2-Layer Perceptron

For now, let’s consider a two-layer perceptron, \( P=P_1\rightarrow P_2\). Perceptron 1 has \( n\) neurons, while perceptron 2 will have \( p\) neurons. We will consider an input vector, \( x\), with \( m\) elements.

Perceptron \( P_1\) will receive the signal \( x\), but \( P_2\) will receive the output of \( P_1\), that is, the activation functions, \( a^1\), of \( P_1\).

The output of \( P_2\) is delivered to the error function. In other words, the activation functions, \( a^2\), of \( P_2\) are the arguments of the Error Function.

The Equation of a 2-Layer Perceptron

Let’s write a simplified version for the equation of \( P\). I will use the same symbol \( P\), as in 11, to designate the network’s output. For greater clarity, we will use the symbol \( \circ\), which is sometimes used to represent the composition of functions.

The expressions in 28 display details of the compositional structure of \( P\). Continuing,

The expressions in 29 are a continuation of the development started in 28, and they show how the input signal, \( x\), is absorbed into the linearity, \( y^1\), and how this linearity is subsequently absorbed by the activation vector of \( P_1\). Note in the first and last lines how \( y^1\) and \( a^1\) are column-vectors.

Meanwhile, the expressions in 30 show how the activations, \( a^1\), from the first layer enter the linearity, \( y^2\), of layer 2.

The Error Function of a 2-Layer Perceptron

Thus, let’s write the error function of \( P\), making its compositional structure explicit.

Again, the superscripts in 32 designate the layer number to which \( W\), \( b\), or \( a\) respectively belong. This equation gives us the way the error function of \( P\) is composed.

We could express it in a more incomplete and less informative, but more compact way like this:

Although none of the expressions in 33 makes the location and relationships of the weights and bias explicit, they allow one to grasp the depth and order of the composition at a single glance.

Preparing to Derive the Error Function of a 2-Layer Perceptron

The learning of a perceptron happens through the adjustment of its weights, and this adjustment is made at the end of a process that repeats many times and begins with calculating the derivative of the current state of the error function with respect to all the weights of a network, \(\frac{\partial E}{\partial W}\).

-

It is important, now, to emphasize that:

-

The adjustment of the weights happens during a process called backpropagation or backward propagation. When a signal \( x\) is presented to the network, \( P(x)\), it "flows" forward through the network, going from the first layer to the last. On the other hand, when the adjustment of the trainable parameters is made, the adjustment signal flows or propagates backward. This is related to the fact that when we derive, we derive backward. The derivation is applied to the outermost layers of the network first, that is, it is applied to the last layers first, and from there, it retrogresses to the initial layer. This will become very clear when we explain the entire process in its generality, starting from Section The Derivative of the Error with Respect to the Weights of Any Given Layer.

-

We want the derivatives of \( E\) with respect to the trainable parameters, \( W=\{W^1, W^2\}\), of \( P=P_1\rightarrow P_2\) so that we can backpropagate the error and perform the perceptron’s learning.

-

These parameters are located at different depths within the network. In our present case, \( W^2\) is in the second or last layer, while \( W^1\) are the weights of the first layer.

-

The derivation and backpropagation have a direction: they go from the last layer to the first.

-

Thus, the calculation of the derivative of a two-layer perceptron is done in two parts. First, we calculate \(\frac{\partial E}{\partial W^2}\) and only then do we calculate \(\frac{\partial E}{\partial W^1}\).

-

The Rates of Change of the Error

We just mentioned that there is a set of all weights \( W=\{W^1 ,W^2\}\). The goal is to derive with respect to all the Perceptron’s weights, but in stages, so that it’s possible to calculate the updates for the weights of \( W^2\) and then those for \( W^1\), and subsequently do the same for the biases.

Derivative of the Error with Respect to the Layer 2 Weights

Without further ado, let’s move on to the derivation of \( E(W^1,W^2,b^1,b^2)\) with respect to the weights and bias of layer 2: \( W^2\) and \( b^2\). The following calculations and comments on their details have already been made in Section Gradient of the Error with Respect to W. Therefore, here, equation 15 is rewritten, adapting its notation to this 2-layer case. In both cases, we are dealing with the last layer of the network.

Note the subtle difference between 17 and the third line of 34. In 17, the rightmost column-vector of matrices contained the components of \( x\) along the single non-zero row of each matrix in the column-vector. Now, the equation in the third line of 34 contains, in those same positions, the elements of the activation vector, \( a^1\), from layer 1.

Note, also, that the derivation process \( \frac{\partial E}{\partial W^2}\) extends only to layer 2, where the weights \( W^2\) are embedded in the linearity \( y^2\). So, taking into account the second line of 28, we can emphasize that:

Finally, note that the last line of 34 can be further developed to obtain a final form that does not contain that clumsy and hard-to-manipulate column-vector of matrices.

As we see below, the final form of \( \frac{\partial E}{\partial W^2}\) is quite reduced and uses the outer product operation, which we denote with the symbol \( \otimes\).

The reader, like this author, probably does not find it natural to have, in the last line of 37, the vector of derivatives of \( E\) succeeding the diagonal matrix of the derivatives of \( a^2\). This is a small price to pay for reducing the form and increasing the ease of manipulation of 34. The commutation involved there comes from the transposition performed on the second line. This transposition affects the diagonal matrix and \( \nabla_{a^2} E\), with the diagonal matrix being identical to its transpose.

On one hand, libraries for matrix and vector manipulation, such as Numpy or Tensorflow, provide a native method for the outer product. On the other hand, coding a column-vector of matrices, although not difficult, can be time-consuming in its writing and preliminary tests for correctness and proper functioning.

But, finally, the execution of the product, which is inside the parentheses in the second to last or last lines of 37, leads us to a column-vector whose scalar entries are products of derivatives that can be arranged to display the correct order of factors, as seen in the column-vector in the first line.

Derivative of the Error with Respect to the Layer 2 Biases

Now, the derivative \( \frac{\partial E}{\partial b^2}\) has the same form as the first and second lines of 19, with the exception of the superscripts, and it also only reaches the first part of the network.

Derivative of the Error with Respect to the Layer 1 Weights

Now, let’s calculate the derivative with respect to \( W^1\) and, right after, comment on its elements.

We have already mentioned that the linearities \( y^1(x)\) and \( y^2(a^1)\) absorb their respective incoming signals, \( x\) and \( a^1\), in the same way. This can be seen clearly in 29 and 31. They are very similar.

But, in the calculation of \( \frac{\partial E}{\partial W^1}\), they end up being derived with respect to different elements of P’s structure. The linearity \( y^2\) is derived with respect to the activations of layer 1, while \( y^1\) is derived with respect to all the weights, \( W^1\), of its own layer, 1. This is how the objective of deriving \( E\) with respect to \( W_1\) is achieved.

For this reason, \( \frac{\partial y^2}{\partial a^1}\) is a \( p\times n\) matrix, while \( \frac{\partial y^1}{\partial W_1}\) is a column-vector with \( n\) elements, each of which is a \( n\times m\) matrix.

By the way, the \( p\times n\) matrix resulting from the calculation of \( \frac{\partial y^2}{\partial a^1}\) is precisely the weight matrix \( W^2\). This can be seen in 41.

Once again, we can simplify the final expression of the derivative of \( E\). Let’s consider the following development starting from the second to last line of 41.

Such a development will also lead to a form involving an outer product with the incoming signal, which in this case is \( x\). So, the calculation can continue as done below, in 43.

Performing the indicated sum, we get:

Note that in the transition from the second to the third lines of 44, we recognized that the expression being transposed is the very same one that appears on the fourth line! From there, it was just a matter of "unpacking" the already known factors. For the moment, we will leave it as it is. But we will soon see that this expression can be further worked on and that it will be part of the recursive methodology we will use to calculate the rates of change of the Error in multi-layer Perceptrons.

We will see that, in perceptrons with more than 2 layers, the pattern \( \frac{\partial a^{l+1}}{\partial y^{l+1}}\cdot W^{l+1}\cdot \frac{\partial a^l}{\partial y^l}\), where \( l\) is the number of a layer, repeats itself. There will always be \( L\) repetitions of this pattern, nested between the initial \( \nabla_{a^L} E\) and the final \( \frac{\partial y^1}{\partial W^1}\), for a perceptron with L layers. This observation will help us produce a general formula for calculating the derivatives of the error function, \( E\), for a perceptron with any number of layers.

Derivative of the Error with Respect to the Layer 1 Biases

Finally, the derivative of E with respect to the biases of the 1st layer. Again, the calculation with respect to the biases closely follows the calculation with respect to the weights of the same layer, only being simpler, since \( \frac{\partial y^1}{\partial b^1}\) produces a unitary matrix.

4.3. A Multi-Layer Perceptron

Now that we have gained a better understanding of a Perceptron’s structure, let’s quickly write the equation for one, \( P\), with any number of layers, \( L\). We again use the symbol \( \circ\) for function composition.

The ellipsis, naturally, indicates that any number of layers can be in its place, and each pair \( a^l\circ y^l\) indicates the elements of layer \( l\), namely, the activation vector whose argument is its linearity vector, \( a^l( y^l)\).

With the exception of the linearity of layer 1, every other linearity, \( L\ge l\ge 2\), has the following form:

where \( n_l\) and \( p_l\) are, respectively, the number of rows and columns of \( W^l\). Since the number of columns of matrix \( W^l\) and the number of rows of vector \( a^{l-1}\) coincide, the number of elements in \( a^{l-1}\) is also \( p_l\).

The linearity of layer 1 has a very similar form to the other linearities, with the exception of its incoming signal, \( x\).

4.4. The Error Function of a Multi-Layer Perceptron

The error function for the case of \( L\) layers is the same as in the other cases. It takes the Perceptron’s output, \( P\), as its argument.

4.5. The Derivative of the Error with Respect to the Weights of Any Given Layer

Next, we will display the formula for the derivative of \( E\) with respect to the weights of a layer \( l\). Its form is perfectly understandable when considering expression 49, because from this, we know we have to use the chain rule as the derivation method to obtain:

while the derivative with respect to the weights of layer 1, \( W^1\), is:

It turns out that, as beautiful and elegant as 50 and 51 may be, in many cases, they could not be calculated in their entirety at each training step of a multi-layer Perceptron!

The more layers a perceptron has, the longer 50 and 51 become. Let’s remember that each derivative in these formulas is a matrix or vector, or even a vector of matrices, whose dimensions can take on very large values. This makes using these formulas in their current form impractical.

Consider two successive calculations, that of \( \frac{\partial E}{\partial W^{l+1}}\) and \( \frac{\partial E}{\partial W^{l}}\). If we were to use formula 50 for these two calculations, we would have calculated all the first \( L-(l+1)+1=L-l\) rates of change from 50 twice!

Fortunately, there is a practical solution to this problem.

4.6. Practical Process for Updating Weights and Biases

The solution to the problem presented in the previous section is to calculate the derivative of the weights of a layer, \( l\), by leveraging all the calculations already made for layers \( L\) down to \( l+1\). At each step down through the layers, the last performed calculation is stored in memory.

Derivative of E with Respect to the Weights

This is done in the following way. Consider the following expressions, all equivalent to the derivative of E with respect to the weights of layer L:

So that, from the second line of 53, we necessarily have 54, which is the part that is important for us to save, for now, in memory for the next calculations.

Now, pay attention to what I will do with 54, because I’m going to multiply it by:

to obtain:

Analyze the left side of the first equation of 56 carefully and make sure that it really reduces to the left side of the fourth equation, as it is vital to understand that the matrix multiplication we just performed really produces the derivative of E with respect to the weights of the next layer of the network, from last to first, namely \( \frac{\partial E}{\partial W^{L-1}}\).

On the right side, in the fourth line of 56, we have the part that we must save in memory to perform the next calculation of the derivative of \( E\), which will be with respect to \( W^{L-2}\).

First of all, let’s use 54 to write:

The second equation in 57 results from performing the matrix product \( \frac{\partial E}{\partial a^L}\frac{\partial a^L}{\partial y^L}\) and shows the recursive nature of the derivative of \( E\) with respect to the Perceptron’s linearities, as it shows the dependency that \( \frac{\partial E}{\partial y^{L-1}}\) has on \( \frac{\partial E}{\partial y^L}\). The method we are developing is a recursive method.

This practical method works because what we are saving in memory is only the result of the calculations and not the matrices whose product gives this result. And it continues this way until we calculate the derivative of the Error with respect to the weights of layer 1.

So, reasoning inductively, whenever we have calculated the derivative of the Error with respect to the weights of a layer \( l+1\), we will have already obtained the derivative of the Error with respect to the linearity of this layer:

Then, at this point, we calculate the quantity corresponding to 55, but now with respect to layer \( l\) and in two steps. First, we calculate only the quantity:

whose product with 58 produces:

Note that in 58, we have already performed the matrix product \( \frac{\partial E}{\partial y^{l+2}}\frac{\partial y^{l+2}}{\partial a^{l+1}}\frac{\partial a^{l+1}}{\partial y^{l+1}}\) that is indicated in 60.

Finally, we multiply both sides of the second equation in 60 by \( \frac{\partial y^{l}}{\partial W^{l}}\) to obtain:

Derivative of E with Respect to the Biases

The process of deducing the derivative of the error function with respect to the biases of any given layer is basically the same as we have followed so far for the derivation with respect to the weights.

Following the same procedures, it can be seen that, also in the case of biases, the derivative of \( E\) with respect to the linearity of layer \( l\), i.e., \( \frac{\partial E}{\partial y^l}\), is the very same one we found in 60. There should be no surprise about this fact, since the weights and biases of layer \( l\) are embedded in the single and same linearity of this layer of the network.

Finally, to find \( \frac{\partial E}{\partial b^l}\), we multiply, as before, equation 60, but now by \( \frac{\partial y^l}{\partial b^l}\) to obtain:

But we have already seen in 19 that we will always have:

so that the second equation in 62 is simply identical to \( \frac{\partial E}{\partial y^l}\) as expressed below:

The General Formula

Phew! Now, we are in a position to summarize what we have deduced so far into a general formula for calculating the derivative of the Error with respect to the linearity of a layer \( l\). With it, it will become very simple to calculate the derivative of the Error with respect to the weights and bias of any layer, following the process we described.

Note that the case \( l=L\) comes directly from 54, while the case \( L>l\ge 1\) is the expression to the right of 60.

According to 64, the equation above is the exact expression that calculates \( \frac{\partial E}{\partial b^{l}}\) for any layer of a Perceptron.

To find \( \frac{\partial E}{\partial W^l}\), the equations in 61 tell us that we just need to multiply 65 on both sides by \( \frac{\partial y^l}{\partial W^l}\) and arrange the left side of the expression to arrive at:

4.7. Analyzing the Dimension of the Matrices for \( \frac{\partial E}{\partial W^l}\) and \( \frac{\partial E}{\partial b^l}\)

Let’s do a quick analysis of the dimension of the matrices involved in 66 and then explicitly perform the products indicated in it. This will give us a picture of the final result of the calculations we have been performing up to this point. Furthermore, this result will be used in 20 for updating the weights, and for this, it is necessary that the dimensions of \( W^l\) and \( \frac{\partial E}{\partial W^l}\) are equal.

Considering the structural elements and the layers indicated in 66, let’s assume that layer \( l+1\) has \( n\) neurons, layer \( l\) has \( p\) neurons, and that the number of elements in the incoming vector, \( s\), is \( m\). The vector \( s\) is the signal that enters layer \( l\). This signal can be either the activation vector of layer \( l-1\), or it can be the vector \( x\) on which the network is being trained. If layer \( l\) is the first layer of the network, then \( s=x\), otherwise, \( s=a^{l-1}\). For this reason, I will use the symbol \( s\) for the remainder of this Section to indicate that we could be dealing with any of these cases.

In this case, \( \frac{\partial E}{\partial a^l}\) is a row vector of \( p\) elements, \( \frac{\partial a^{l}}{\partial y^{l}}\) is a square matrix \( p\times p\), while \( \frac{\partial y^l}{\partial W^l}\) is a column-vector with \( p\) positions whose elements are matrices with the same dimension as \( W^l\), i.e., \( p\times m\).

Now, \( \frac{\partial E}{\partial y^{l+1}}\) is a row vector of \( n\) elements, \( \frac{\partial y^{l+1}}{\partial a^{l}}\) is an \( n\times p\) matrix, and last but not least, \( \frac{\partial E}{\partial W^l}\) is a matrix with the dimensions of \( W^l\).

If necessary, consult Section Derivative of Vector Functions in the Appendix for a brief explanation of the dimension of objects resulting from the derivation of vector functions.

So, in the case where \( l=L\), we have a product of three matrices with the following dimensions: \( 1\times p\), \( p\times p\), and \( p\times 1\). This is the minimum we would expect for the product to be possible, which is, the number of columns of the matrix on the left being equal to the number of rows of the matrix on the right.

In the case where \( L>l\ge 1\), we have four matrices with the following dimensions, from left to right: \( 1\times n\), \( n\times p\), \( p\times p\), and finally, \( p\times 1\). Again, we have the minimum we would need.

In both cases, the final dimension \( p\times 1\) is that of a column-vector with \( p\) rows and \( 1\) column, whose \( p\) positions are matrices that have the dimension of \( W^l\), as we have seen several times now. Thus, in both cases, the \( 1\times 1\) dimension of the result of the matrix products is not a scalar, but rather a single matrix that, as we’ve seen, has the dimension of the weight matrix of layer \( l\), i.e., \( p\times m\).

So, when \( l\) is the last layer of the network:

It is easy to see that the last line in 68, and indeed its entire development since 67, is essentially the one that was started in 36, with the exception of the symbols for the incoming signal and the layer number.

The outer product is not as common as the normal product of vectors, but its concept is just as simple. I present its definition in Appendix Outer Product.

Now, let’s move on to the explicit form of \( \frac{\partial E}{\partial W^l}\) for any layer \( l\), except the last one.

In equation 39 and the following, we had already seen that the derivative of a linearity with respect to its input signal is the weight matrix of that linearity’s layer. So, in 69, we have already used the fact that \( \frac{\partial y^{l+1}}{\partial a^l}=W^{l+1}\). Continuing,

Up to this point, we have performed the indicated product of matrices or vectors, from left to right. The reader should follow these developments very closely, as they are responsible for producing the mathematical forms that make learning possible in principle. The rightmost column-vector is, in fact, the vertical vector of matrices we have been talking about. These matrices will appear explicitly shortly below.

Next, we will re-encounter the row vector we found in the second expression of 70, but this time, it will be enclosed within a transpose operation. This transposed expression is responsible for the final form we will arrive at below.